In the early days (and still), visualizers often modified the color palette in Windows directly to achieve some pretty cool effects.

#Song visualizer program update#

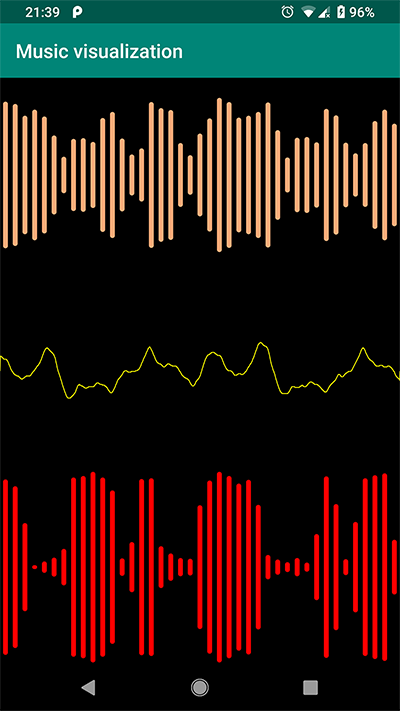

The visualizer does a Fourier transform on each short slice of the audio, extracting the frequency components, and updates the visual display using that frequency information. How the display is updated in response to the frequency info is up to the programmer. Generally, the graphics methods have to be extremely fast and lightweight in order to update the visuals in time with the music (and not bog down the PC).
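To make the "Fourier transform on each slice" step concrete, here is a minimal sketch in plain Python. The sample rate, the slice size, the Hann window and the synthetic sine input are all assumptions chosen for illustration; a real visualizer would feed in decoded audio and would almost certainly use an optimized FFT library rather than this small recursive one.

```python
import cmath
import math

SAMPLE_RATE = 44100   # assumed sample rate in Hz
SLICE_SIZE = 512      # assumed slice length: 512 / 44100 is about 11.6 ms


def fft(samples):
    """Recursive radix-2 FFT. len(samples) must be a power of two."""
    n = len(samples)
    if n == 1:
        return [complex(samples[0])]
    even = fft(samples[0::2])
    odd = fft(samples[1::2])
    out = [0j] * n
    for k in range(n // 2):
        twiddle = cmath.exp(-2j * math.pi * k / n) * odd[k]
        out[k] = even[k] + twiddle
        out[k + n // 2] = even[k] - twiddle
    return out


def magnitude_spectrum(slice_samples):
    """Return magnitudes for the bins from 0 Hz up to (not including) Nyquist."""
    n = len(slice_samples)
    # A Hann window (an assumption here) softens the edges of the slice.
    windowed = [s * (0.5 - 0.5 * math.cos(2 * math.pi * i / n))
                for i, s in enumerate(slice_samples)]
    return [abs(c) for c in fft(windowed)[: n // 2]]


# Synthetic slice standing in for decoded audio: a sine sitting exactly on bin 8.
tone_bin = 8
audio_slice = [math.sin(2 * math.pi * tone_bin * i / SLICE_SIZE)
               for i in range(SLICE_SIZE)]

spectrum = magnitude_spectrum(audio_slice)
peak_bin = max(range(len(spectrum)), key=spectrum.__getitem__)
peak_hz = peak_bin * SAMPLE_RATE / SLICE_SIZE
print(f"strongest component: bin {peak_bin}, about {peak_hz:.1f} Hz")
```

Each entry of `spectrum` covers a band `SAMPLE_RATE / SLICE_SIZE` Hz wide, so a longer slice gives finer frequency resolution at the cost of slower reaction to the music.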

If you want to read about beat/tempo detection, google for Masataka Goto; he's written some interesting papers about it. Paul Bourke is a name you want to google anyway: he has a lot of information about topics you either want to know right now or probably will in the next 2 years.

Once you have some values for, say, bass, midtones, treble and volume (left and right), it's all up to your imagination what to do with them. Display a picture and multiply its size by the bass, for example, and you'll get a picture that zooms in on the beat. As a visualizer plays a song file, it reads the audio data in very short time slices (usually less than 20 milliseconds), so these values are refreshed many times per second.
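The "multiply the size by the bass" idea can be sketched as follows. The band edges (250 Hz and 4000 Hz), the `gain` and `max_scale` parameters, the picture size and the hand-made spectrum are hypothetical values picked for illustration; the function names are not from any particular library.

```python
def band_levels(magnitudes, sample_rate, fft_size):
    """Average the spectrum magnitudes into bass / mid / treble levels."""
    bin_hz = sample_rate / fft_size
    bands = {"bass": [], "mid": [], "treble": []}
    for k, mag in enumerate(magnitudes):
        freq = k * bin_hz
        if freq < 250:          # assumed upper edge of the bass band
            bands["bass"].append(mag)
        elif freq < 4000:       # assumed upper edge of the mid band
            bands["mid"].append(mag)
        else:
            bands["treble"].append(mag)
    return {name: (sum(v) / len(v) if v else 0.0) for name, v in bands.items()}


def zoom_scale(bass, gain=0.5, max_scale=2.0):
    """Map a bass level to a picture scale factor, clamped to max_scale."""
    return min(1.0 + gain * bass, max_scale)


# Hand-made spectrum standing in for one analyzed slice:
# strong energy in the three lowest bins, little everywhere else.
magnitudes = [1.0] * 3 + [0.2] * 253
levels = band_levels(magnitudes, sample_rate=44100, fft_size=512)

scale = zoom_scale(levels["bass"])
base_width, base_height = 640, 480            # hypothetical picture size
width, height = int(base_width * scale), int(base_height * scale)
print(levels, scale, (width, height))
```

In practice the levels usually need normalizing and some smoothing between slices, otherwise the picture jitters instead of pulsing.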

If you're comfortable with math, you might want to read Paul Bourke's page. For creating BeatHarness, I 'simply' used an FFT to get the audio spectrum, then applied some filtering and edge/onset detectors.
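The text does not say which onset detector BeatHarness uses, so the following is only a sketch of one common approach, spectral flux with an adaptive threshold, and not the author's method. The `history`, `sensitivity` and `min_flux` parameters and the toy spectra are assumptions for illustration.

```python
def spectral_flux(prev_mags, cur_mags):
    """Sum of the magnitude increases between two consecutive spectra."""
    return sum(max(c - p, 0.0) for p, c in zip(prev_mags, cur_mags))


def detect_onsets(spectra, history=8, sensitivity=1.5, min_flux=0.5):
    """Return indices of frames whose flux clearly exceeds the recent average."""
    onsets = []
    recent = []  # flux values of the last `history` frames
    for i in range(1, len(spectra)):
        flux = spectral_flux(spectra[i - 1], spectra[i])
        mean = sum(recent) / len(recent) if recent else 0.0
        if flux > sensitivity * mean and flux > min_flux:
            onsets.append(i)
        recent.append(flux)
        if len(recent) > history:
            recent.pop(0)
    return onsets


# Toy magnitude spectra standing in for consecutive analyzed slices.
quiet = [0.1] * 4
loud = [1.0] * 4
spectra = [quiet, quiet, quiet, loud, quiet, quiet, loud, loud]

onsets = detect_onsets(spectra)
print(onsets)
```

Only increases in energy count toward the flux, so a sustained loud passage triggers once at its start rather than on every frame.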
